As I’m sure many readers are aware by now, Visual Studio developers are about a month away from the second part of the Visual Studio 2005 release, Visual Studio 2005 Team System, which includes a slew of new features that make a developer’s job easier.

In this first article of a two-part series I’ll describe a few of the specific problems you and your team probably face, problems that served as the impetus for the creation of Visual Studio 2005 Team System (VSTS). In the next article I’ll get down to how VSTS is designed to address these issues. Taken together, the goal of these two articles is not simply to give you a laundry list of VSTS features, but rather to place them in the context of the problems they were designed to address.

Software Development is Hard

I don’t want to sound like a grumpy old man who sits in front of the barbershop longing for yesterday while muttering about failures of the modern world, but software development is hard and getting harder.

Gone are the simpler days of monolithic applications running on a single platform free from deployment issues. Gone are the heady days of client/server computing and the “enterprise data model” accessible through Visual Basic or PowerBuilder apps free from data sharing issues. And gone are even the days of simple data-driven ASP or ASP.NET web applications accessing our data behind our firewall. No, today in our ever more connected world, our solutions require access from multiple platforms, devices, and networks (heterogeneity); data sharing across the enterprise and with business partners (service orientation); and scalability on commodity hardware. All of this must happen in an environment where the driving factors are less time and fewer resources.

This trend towards increasing complexity has driven many organizations to consider adopting the more loosely coupled architectural pattern of service orientation, or a Service Oriented Architecture (SOA), made practical by the implementation and maturation of web services. And as with any architectural shift, SOA and web service applications come with a new set of design and implementation challenges that developers will need to address.

I know that this comes as no surprise to many of you reading this article, you who have managed, designed, built, and tested complex enterprise solutions requiring heterogeneity, service orientation, and scalability. But I also know that in doing so many of you are looking for tools to make your jobs easier.

So why is software development difficult? In short I see four primary reasons teams struggle to build quality software.

Communication Breakdowns

The reality is that in many cases IT teams are today both geographically and functionally distributed. This distribution creates gaps in communication that provide opportunities for issues to be dropped, misunderstandings to arise, and information flow to be slow and haphazard.

Fortunately, as you’ll see in the next article, with VSTS the very technology that makes geographical distribution possible can in many cases also be applied to open up new channels of communication. And of course the benefits of tools enabling better communication aren’t restricted to geographically disparate teams. Even in small and local teams, crucial information is often dispersed and not easy to find, or not captured in the first place. Tools that provide a centralized location for all of this information are in many ways the first step towards controlling the project.

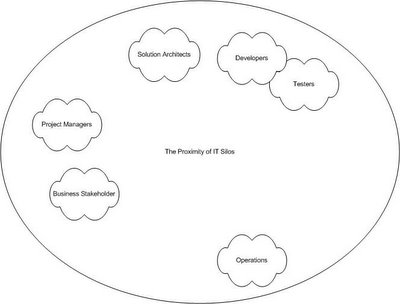

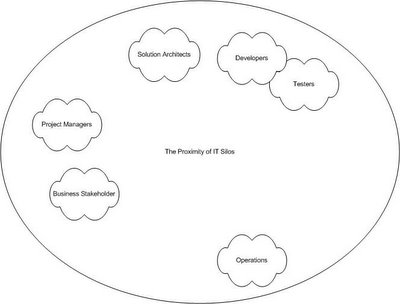

At the same time, as with much of the modern world, the IT industry has tended towards increasing specialization. Although in a small organization you may have to be a jack of all trades, in many organizations silos have developed where expertise in project management is restricted to one group while development resources come from another and solution architectures from a third. Because some of these groups share certain attributes and not others, there are differing amounts of conceptual space between them, as illustrated in Figure 1, where Developers and Testers are more closely related than Solution Architects and Project Managers. Over time this specialization has the effect of producing conflicting best practices, conflicting architectures, and ultimately conflicting visions for how software should be developed.

Figure 1: The Proximity of IT Silos

As an example of such a breakdown, consider the case where a Solution Architect is designing a system all alone, safely ensconced in his architectural silo made of ivory. When his architecture is complete he hands it off to the developers, who merrily code away. Job done. Not so fast. When it comes time to discuss how this solution will actually be deployed in production, the Solution Architect is shocked and dismayed to discover that Operations doesn’t support the server or communications configuration he was assuming for his “perfectly architected” solution. And of course Operations derives a certain guilty pleasure in crushing the ivory tower. His solution no longer looks so perfect, and he must then rework the architecture or spend political capital trying to get the Operations policies changed, costing both time and development resources.

What our architect needed in this scenario was a tool that helped him map his solution architecture to the existing hardware and software environment including the Operations policy so that he could take it into consideration during his work. In the second article in this series you’ll see how VSTS addresses this problem through a suite of design tools.

Tools that enable information flow between these groups will go a long way towards solving communication breakdowns.

Lack of Predictability

The Danish physicist Niels Bohr once said that “prediction is very difficult, especially about the future”. I don’t think he had software development in mind, but I do think it is applicable. This is borne out intuitively by the concern many in the IT industry have about being able to predict the success of projects. Their concern is not unfounded. As Steve McConnell writes in his 2004 book Professional Software Development:

“Roughly 25 percent of all projects fail outright, and the typical project is 100 percent over budget at the point it’s cancelled. Fifty percent of projects are delivered late, over budget, or with less functionality than desired.”

Why do these projects end up over budget, late, feature-poor, or cancelled? In most cases it is because their teams couldn’t accurately predict or control the software development lifecycle. And at the heart of enabling better prediction in your projects lie metrics and repeatable practices.

The simple fact is that teams manage projects according to the metrics they are able to collect. If the metrics collected are the right ones, the Project Manager and other stakeholders can quickly get a feel for where the project is headed, better estimate its completion, and actually drive it towards completion by analyzing risk and making course corrections when necessary. In addition, a key point that organizations often undervalue is that good (meaning real and not anecdotal) metrics on previous projects, rather than personal memory, subjective intuition, or seat-of-the-pants guesswork, provide the best input into making estimates on future projects.

I would suggest that what project teams could use are tools that help them address the area of metrics in at least the following ways:

- By suggesting predefined metrics taken from best practices that have been proven to provide insight into projects, such as metrics related to progress towards the schedule, the stability of the plan, code quality, and the effectiveness of testing.
- By offering a single place in which data to produce metrics is stored in order to eliminate the separate silos in which that data is stored today.
- By supporting an automatic way of collecting data in a timely fashion that is integrated into the natural workflow of the team.

The second aspect of more accurately predicting the success of software projects lies in the adoption of repeatable or “best” practices. While we all know that one size does not fit all, I also know that in almost any endeavor applying a structured or methodical approach reduces the variability in the outcomes.

Unfortunately, many teams have no set of repeatable or structured practices that they can hang their hats on and use to focus their efforts. On these teams, for example, a developer is simply tasked with implementing a feature and then left to his own devices. While some developers may naturally or through intentional analysis apply some set of structured principles to their development, many will not, and the result is that the productivity of those developers, and hence the predictability of both the software quality and the schedule, is almost impossible to get a handle on. This is largely borne out by the oft-repeated finding that developers differ in their productivity on the order of 10 to 1, as noted by McConnell in Professional Software Development. In other words, some developers are 10 times more productive than others. And just as the adoption of uniform approaches to training and conditioning has shrunk the variation in times between competitors in athletic competitions such as track and field, I think at least part of the difference in the productivity of software developers can be overcome by applying repeatable and proven practices to the software development process.

Tools can help in this regard by integrating structured practices into the normal workflow of developers, for example, by including a unit testing framework.
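To make the idea of a unit testing framework concrete, here is a minimal sketch. It uses Python’s standard `unittest` module purely as a stand-in and is not the framework that ships with VSTS. The function `percent_over_budget` and its test cases are hypothetical, invented only to show how a structured practice (write the check alongside the code) becomes part of a developer’s normal workflow.

```python
import unittest


def percent_over_budget(planned: float, actual: float) -> float:
    """Return how far the actual cost exceeds the planned cost, as a percentage.

    Hypothetical helper used only to give the tests something to exercise.
    """
    if planned <= 0:
        raise ValueError("planned cost must be positive")
    return (actual - planned) / planned * 100.0


class PercentOverBudgetTest(unittest.TestCase):
    """Each test states one expectation about the function's behavior."""

    def test_double_the_plan_is_100_percent_over(self):
        self.assertEqual(percent_over_budget(100.0, 200.0), 100.0)

    def test_on_budget_is_zero(self):
        self.assertEqual(percent_over_budget(50.0, 50.0), 0.0)

    def test_rejects_non_positive_plan(self):
        with self.assertRaises(ValueError):
            percent_over_budget(0.0, 10.0)
```

Saved in a file, these tests can be run with `python -m unittest`. The point is less the arithmetic than the habit: the expectations live next to the code and can be re-run on every change.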

Lack of Integration

The primary cause of this problem is that over time many organizations have accumulated a collection of software development lifecycle (SDLC) tools through purchase, acquisition or merger, or even custom development. Unfortunately, in most cases these tools weren’t designed to be used together and are not integrated with the IDEs in use in the organization. This lack of integration can manifest itself in myriad ways, but for teams it often bubbles to the surface as follows:

- The data collected by the tools teams use in their SDLC exists in separate silos and is not easily integrated or related to data from other tools.
- The tools they use don’t provide a platform- and device-independent way to implement extensibility and custom tools.
- Using “best of breed” tools forces their team members to context-switch between tools in order to perform functions of their process.
- The tools they use don’t know about the policies or constraints of their process. In other words, their tools cannot interact in any meaningful way with the processes they do have.

Obviously, the size of the overall integration problem is more often than not proportional to the size of the organization you’re in. However, even small organizations are not immune from integration issues, as even a single relied-upon tool that isn’t integrated can manifest all of the above issues.

One approach to this problem, and the one taken by VSTS as you’ll see in the next article, would be to integrate the lifecycle tools directly into the software that team members use in their day-to-day work. For example, rather than require a developer to go to a web site to see which features he’s been assigned, why not make those features visible from inside his IDE? And further, why not have the tool associate the feature with the actual source code and unit tests he’s working on so that metrics can be automatically collected on the implementation of the task?

A second way in which lack of integration rears its ugly head is when tools don’t work together to surface or expose policies adopted by the organization. Typically these policies or guidelines that attempt to regulate the software development process exist only in three-ring binders or, if you’re fortunate, on an intranet. Unfortunately, in both cases out of sight is out of mind, and so the policies end up being enforced across the organization only sporadically, if at all.

What is needed is for tools to enforce policies dynamically during the regular workflow of team members. One of the bets Microsoft is making with VSTS is that once development tools automate process guidance, most of the overhead associated with the process, as well as the majority of the resistance to compliance, will evaporate.
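As a rough illustration of what enforcing policy inside the workflow could look like, the sketch below evaluates a set of rules at the moment a developer tries to check in code. It is not the VSTS API. The `Changeset` type, the three example policies, and `evaluate_checkin` are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Changeset:
    """A pending check-in (hypothetical shape, for illustration only)."""
    author: str
    comment: str
    work_item_ids: List[int] = field(default_factory=list)
    unit_tests_passed: bool = False


# A policy inspects a changeset and returns a violation message,
# or None when the changeset satisfies the policy.
Policy = Callable[[Changeset], Optional[str]]


def require_work_item(cs: Changeset) -> Optional[str]:
    if cs.work_item_ids:
        return None
    return "Check-in must be associated with at least one work item."


def require_comment(cs: Changeset) -> Optional[str]:
    if cs.comment.strip():
        return None
    return "Check-in must include a comment."


def require_passing_tests(cs: Changeset) -> Optional[str]:
    if cs.unit_tests_passed:
        return None
    return "Unit tests must pass before check-in."


DEFAULT_POLICIES: List[Policy] = [
    require_work_item,
    require_comment,
    require_passing_tests,
]


def evaluate_checkin(cs: Changeset,
                     policies: Optional[List[Policy]] = None) -> List[str]:
    """Run every policy; an empty result means the check-in may proceed."""
    active = DEFAULT_POLICIES if policies is None else policies
    violations: List[str] = []
    for policy in active:
        message = policy(cs)
        if message is not None:
            violations.append(message)
    return violations
```

A changeset with no work item, an empty comment, and failing tests yields three violation messages, while one that satisfies all three rules yields an empty list. Because policies are plain functions in a list, an organization could add or remove rules without changing the check-in step itself, which is the kind of customization the binder-on-a-shelf approach cannot offer.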

It is this kind of deep integration, in terms of both your team members’ workflow and their tools, that can improve the productivity of your teams and the quality of the software you produce.

Lack of Support for the Process

Trying to coordinate the people, geographies, roles, and tools involved in projects while at the same time tracking and making predictions about the interaction of these aspects would be a challenge for anyone. So how do teams solve these sorts of problems and raise their level of success?

The standard answer to that question has been to adopt a software development methodology such as the Rational Unified Process (RUP) or Extreme Programming (XP), or a customized version of one of these or other standard approaches. While this approach can be and often is effective, most developers don’t have access to tools that surface or provide visibility into the process so that they can more easily follow it. And because of the lack of tool support, the perceived complexity of some of these methodologies, and simple inertia, some teams simply haven’t adopted any methodology or process at all. Unfortunately, these teams must instead fly by the seat of their pants much of the time. What is needed are tools that provide the overarching structure for projects in a way that lets teams flexibly integrate their own process or use a predefined process that guides them on their way.

Because many teams (primarily those in smaller organizations who don’t have the resources or expertise to implement a rigorous process) have not adopted a software development process there is ample opportunity for tools to provide guidance and therefore improve the quality and timeliness of software produced by these teams.

Of course, the majority of larger organizations have already adopted a methodology and so their challenge is in making their process visible through the tools they use.

What is needed is for tools to allow for the customization of boilerplate processes or the building of custom processes that integrate with the tools.

Summing it Up

In this article I’ve discussed a number of common problems teams face when developing solutions in the increasingly complex and constrained world of software development and hinted at how VSTS will address them.

What is needed in order to solve these kinds of problems is not just another upgrade of a team’s integrated development environment, but instead an “integrated services environment” that encompasses the entire extended software development team.

At its core this integrated services environment, in order to be successful, needs to accomplish three things:

- Reduce the complexity of delivering modern service-oriented solutions
- Be tightly integrated and facilitate better team collaboration and communication
- Enable customization and extensibility by organizations and ISVs

Exactly how VSTS is constructed to make this happen will have to wait for the next article.