Localizing Services

Since our service-oriented infrastructure is meant to be extended for use by partner countries, we architected it so that the culture settings of different service consumers could be taken into account.

As discussed in my post on Messaging Standards, this meant incorporating into our standard SOAP header the Culture and UICulture elements defined as follows:

To set the culture and return messages in the appropriate language, we then had to modify several areas of our code.

ServiceAgentBase

From the service consumer's perspective, a call to a service operation can be made through a Service Agent using our Service Agent Framework. The layer supertype in that framework is our ServiceAgentBase class, from which all service agents are derived.

When a method on one of the derived service agent classes is called, the implementation delegates much of the work to a protected method of the ServiceAgentBase such as ExecuteOperation. This method is then responsible for building the SOAP request, choosing a transport, and then sending the request and receiving the response.

To build the SOAP message, the method uses a set of schema classes that we generated using XSD.exe and then modified. For example, a class simply called Compassion.Schemas.Common.SoapHeader encapsulates our header schema. That class contains the following:

    public class SoapHeader
    {
        private string _culture = Thread.CurrentThread.CurrentCulture.Name;
        private string _uiculture = Thread.CurrentThread.CurrentUICulture.Name;
        ...
    }

As a result, clients can set the culture on the current thread before instantiating a service agent (typically the culture is already set, of course) and thereby transmit the culture through the SOAP envelope.

    Thread.CurrentThread.CurrentCulture = new CultureInfo("de-DE");
    Thread.CurrentThread.CurrentUICulture = new CultureInfo("de-DE");

    // Create the update request
    ConstituentUpdateRequest req = CreateRequest();

    // Create the service agent
    ConstituentServiceAgent agent = new ConstituentServiceAgent(
        "Compassion.Services.Agent.Constituent.Tests.Unit");

    // Send the request and get the response
    ConstituentUpdateResponse resp = agent.UpdateConstituent(req);

In this way, consumers that use the service agent automatically have their culture taken into account when service operations are processed.
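One detail worth spelling out is timing: because the header reads the thread's culture in its field initializers, the value is fixed at the moment the header object is constructed. The sketch below illustrates this with a hypothetical stand-in class, CultureHeaderSketch. It is not the real Compassion.Schemas.Common.SoapHeader, and it only assumes that the real class captures the culture the same way; the CreateUnder helper is likewise invented for illustration.

```csharp
using System.Globalization;
using System.Threading;

// Hypothetical stand-in for the generated SoapHeader class (assumption:
// the real class captures the culture in field initializers, as shown above).
public class CultureHeaderSketch
{
    // Field initializers run once, when the object is constructed.
    private readonly string _culture = Thread.CurrentThread.CurrentCulture.Name;
    private readonly string _uiCulture = Thread.CurrentThread.CurrentUICulture.Name;

    public string Culture { get { return _culture; } }
    public string UICulture { get { return _uiCulture; } }
}

public static class CultureHeaderDemo
{
    // Builds a header while the thread runs under the given culture,
    // then restores the thread's previous culture settings.
    public static CultureHeaderSketch CreateUnder(string cultureName)
    {
        CultureInfo oldCulture = Thread.CurrentThread.CurrentCulture;
        CultureInfo oldUICulture = Thread.CurrentThread.CurrentUICulture;
        try
        {
            Thread.CurrentThread.CurrentCulture = new CultureInfo(cultureName);
            Thread.CurrentThread.CurrentUICulture = new CultureInfo(cultureName);
            return new CultureHeaderSketch();
        }
        finally
        {
            Thread.CurrentThread.CurrentCulture = oldCulture;
            Thread.CurrentThread.CurrentUICulture = oldUICulture;
        }
    }
}
```

A header returned by CultureHeaderDemo.CreateUnder("de-DE") keeps reporting de-DE even after the thread's culture has been restored, which is why the culture has to be set before the service agent builds its request rather than afterwards.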

WebService Transport

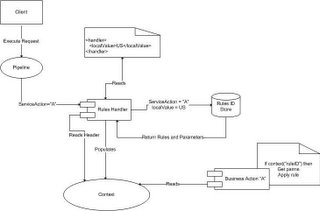

When the SOAP message reaches the server, EDAF intercepts the request and processes it through one of its transports. Thus far we've used the WebService transport exclusively, so we modified the standard code to be aware of our SOAP headers.

Within the WebServiceInterfaceAdapter.cs file we added code to process SOAP headers that match our namespace. When a header matches, the code unpacks it and builds a CIHeader object, which it then places in the EDAF Context object like so:

    // Create the header if it doesn't exist yet
    if (h == null) { h = new csc.CIHeader(); }

    switch (sun.Element.LocalName)
    {
        case "Culture":
        {
            h.Culture = sun.Element.InnerText;
            break;
        }
        case "UICulture":
        {
            h.UICulture = sun.Element.InnerText;
            break;
        }
        ...
    }

    // Add the header to the context
    _context.Add("ciHeader", h, false);

One interesting aspect of this is that if the WebServiceInterfaceAdapter does not find the culture settings in the SOAP header, it will try to read them from the underlying transport itself, in this case HTTP, like so:

    if (h.UICulture == null)
    {
        // See if you can find the culture in the HTTP header
        if (HttpContext.Current.Request.UserLanguages != null)
        {
            if (HttpContext.Current.Request.UserLanguages.Length > 0)
            {
                // Take the first one in the collection
                h.UICulture = HttpContext.Current.Request.UserLanguages[0].ToString();
                if (h.Culture == null)
                {
                    h.Culture = HttpContext.Current.Request.UserLanguages[0].ToString();
                }
            }
        }
    }

At this point the culture has been captured on the service provider side.
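One caveat with the HTTP fallback is that the entries in UserLanguages come from the Accept-Language header and can carry a quality suffix, for example "en;q=0.8", which is not a valid culture name. The following is a minimal sketch of how an entry could be cleaned up before it is stored in the header. The class and method names (AcceptLanguageSketch, StripQuality, FirstUsableLanguage) are hypothetical and are not part of EDAF or of our adapter.

```csharp
using System;

// Hypothetical helper, not part of EDAF or the adapter described above.
public static class AcceptLanguageSketch
{
    // Returns the bare language tag from one Accept-Language entry,
    // e.g. "de-DE;q=0.8" -> "de-DE". Returns null for blank entries
    // and for the "*" wildcard, which does not name a culture.
    public static string StripQuality(string entry)
    {
        if (string.IsNullOrWhiteSpace(entry)) return null;

        int semicolon = entry.IndexOf(';');
        string tag = (semicolon >= 0 ? entry.Substring(0, semicolon) : entry).Trim();

        return (tag.Length == 0 || tag == "*") ? null : tag;
    }

    // Returns the first entry that yields a usable tag, or null if
    // the array is null, empty, or contains no usable entries.
    public static string FirstUsableLanguage(string[] userLanguages)
    {
        if (userLanguages == null) return null;

        foreach (string entry in userLanguages)
        {
            string tag = StripQuality(entry);
            if (tag != null) return tag;
        }
        return null;
    }
}
```

With a helper like this, the adapter could assign AcceptLanguageSketch.FirstUsableLanguage(HttpContext.Current.Request.UserLanguages) to h.UICulture instead of taking element zero as-is. The sketch only normalizes the string; it does not check that the tag is a culture the server actually knows.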

BusinessActionBase

After the request passes through the adapter and any handlers that have been configured, it makes its way to the business action where the actual work is done. All of our business actions are derived from our BusinessActionBase class which, as you might expect, contains the code to read the culture information and use the .NET ResourceManager to allow the derived class to pick up the correct strings.

The supertype does this by examining the CIHeader object in the Context, if it exists, and pulling the values out.

    // Try to pull it from the ciHeader.
    if (Header != null)
    {
        if ((Header.Culture != null) && (Header.Culture.Trim().Length > 0))
            culture = Header.Culture;
        if ((Header.UICulture != null) && (Header.UICulture.Trim().Length > 0))
            uiCulture = Header.UICulture;
    }

    // Try to pull it from the app settings.
    if (culture == string.Empty)
        culture = ConfigurationSettings.AppSettings["Culture"];
    if (uiCulture == string.Empty)
        uiCulture = ConfigurationSettings.AppSettings["UICulture"];

    // Call SetCulture with the resolved values.
    SetCulture("Strings", this.GetType().Assembly, culture, uiCulture);

You'll also notice that this code includes a last-ditch effort to pull the culture settings from the application's configuration file. This allows the implementer of the service to set a default culture other than the neutral culture.
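The order of precedence described here (SOAP header first, then the configuration file, then the default) can be sketched as a small pure function. This is an illustration only: CultureResolutionSketch and Resolve are hypothetical names, and unlike the code above the sketch trims the value it returns and treats a missing configuration entry (which AppSettings reports as null) the same as an empty one.

```csharp
using System;

// Hypothetical helper illustrating the precedence order; not the
// actual BusinessActionBase implementation.
public static class CultureResolutionSketch
{
    // Picks the culture name to use: the header value if it is present
    // and non-blank, otherwise the configured default, otherwise the
    // empty string, which is the name of the invariant culture.
    public static string Resolve(string headerValue, string configValue)
    {
        if (!string.IsNullOrWhiteSpace(headerValue)) return headerValue.Trim();
        if (!string.IsNullOrWhiteSpace(configValue)) return configValue.Trim();
        return string.Empty;
    }
}
```

Keeping the decision in one function like this makes the fallback easy to unit test, and returning the empty string rather than null matters because the CultureInfo constructor rejects a null name.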

The last line of code above calls the SetCulture method to actually set the culture. The key part of SetCulture sets the current thread's culture to the codes passed in and instantiates the ResourceManager, which is then exposed via a protected property.

    Thread.CurrentThread.CurrentCulture = new CultureInfo(strCultureCode);
    Thread.CurrentThread.CurrentUICulture = new CultureInfo(strUICultureCode);
    m_objResourceManager = new ResourceManager(strResourceFileBaseName, objCaller);

The end result is that derived business actions use the exposed resource manager to return exception and other messages to the client in the client's own language. For example, when an exception is raised, the business action would use code like the following to return an exception code and message to the caller.

    exception.Message = this.ResourceManager.GetString("MissingAddress");
    exception.Code = this.ResourceManager.GetString("MissingAddressCode");
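It may help to see which cultures the ResourceManager considers for such a lookup. GetString uses the thread's CurrentUICulture and, when no resource exists for that exact culture, falls back through its parent cultures to the default resources. The sketch below simply walks CultureInfo.Parent to list that chain; FallbackChainSketch and Chain are hypothetical names used for illustration, and the sketch does not load any resource files.

```csharp
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper that lists the cultures a resource lookup would
// fall back through; it does not touch any actual resources.
public static class FallbackChainSketch
{
    public static List<string> Chain(string cultureName)
    {
        var names = new List<string>();
        CultureInfo culture = CultureInfo.GetCultureInfo(cultureName);

        while (true)
        {
            names.Add(culture.Name);
            // The invariant culture has an empty name and is the end of the chain.
            if (culture.Name.Length == 0) break;
            culture = culture.Parent;
        }
        return names;
    }
}
```

For de-DE the chain is de-DE, then de, then the invariant culture (whose name is the empty string). In practice this means a service only needs a German resource file for de to serve both de-DE and de-AT callers, with the default strings as the final fallback.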